Building Images


Dockerfile

We previously used an existing image created by linuxserver. Now, we’re going to make our own.

When creating a Docker image, each step in the build process creates a ‘layer’.

This is beneficial as common layers will only be downloaded once. This results in images being downloaded faster and taking up less space.

- Create a file called Dockerfile. When building an image, this is the default name that is used.

- Add the following line:

    FROM ubuntu

Most images have a base image that they build on top of. In our case, we’re building on top of ubuntu.

Since we haven’t specified a tag, it will default to ubuntu:latest.

In most production environments, it is best to explicitly specify which tag to use, as relying on latest can result in dependency issues. But for this project, that won’t be necessary.

- Add the following lines:

    RUN apt-get update
    RUN apt-get install -y curl

The RUN instruction will cause the shell to execute the provided commands. This is one way we’re able to manipulate the base image.

There are two forms for the RUN instruction:

1) RUN <command> - shell form, which runs the command with /bin/sh -c

2) RUN ["executable", "param1", "param2"] - exec form, in which you specify exactly what the command will run with

As an example, apt-get update could be typed as follows:

    RUN ["/bin/sh", "-c", "apt-get update"]

Note that the whole command is passed to -c as a single string. However, the first variation is the most commonly used.
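To see why the command has to be a single string when /bin/sh -c is spelled out in exec form, the behaviour of sh -c can be tried on any machine. This is a small sketch, not part of the tutorial's Dockerfile; echo stands in for actually running apt-get, and the variable names are only for illustration.

```shell
# sh -c takes ONE command string; any further arguments become $0, $1, ...
# echo is used as a harmless stand-in for executing the command.

# Whole command in a single string: both words reach the command.
joined=$(/bin/sh -c 'echo apt-get update')

# Command split over two arguments: "update" becomes $0 and is never run.
split=$(/bin/sh -c 'echo apt-get' 'update')

printf 'single string: %s\n' "$joined"
printf 'split args:    %s\n' "$split"
```

The first line printed ends in update and the second does not, which is why the exec form keeps "apt-get update" together in one array element after "-c".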

- Add the following lines:

    VOLUME [ "/downloads" ]

The VOLUME instruction will create a mount point. This is the preferred mechanism for persisting data.

This will create the directory /downloads inside the container. If we do not provide a -v argument when running the container, Docker will automatically create an anonymous volume and mount it for us.

- Add the following line:

    WORKDIR /downloads

The WORKDIR instruction sets the working directory for the instructions that follow it.

- Add the following line:

    CMD ["/usr/bin/curl", "-O", "https://raw.githubusercontent.com/jaketreacher/docker-media-stack/master/README.md"]

The CMD instruction provides the default execution for a running container. There can be only one CMD instruction. If more than one is provided, only the last will be used.

The command we’ve provided will download the README.md file from the master branch of this project. Not exactly the most useful task, but don’t worry, we’ll be changing it soon!

Similarly to RUN, CMD can be written in both a shell form and an exec form. The exec form that we’ve used is the most common for CMD, whereas the shell form is most common for RUN.

Our Dockerfile should look like this:

    FROM ubuntu
    RUN apt-get update
    RUN apt-get install -y curl
    VOLUME [ "/downloads" ]
    WORKDIR /downloads
    CMD [ "/usr/bin/curl", "-O", "https://raw.githubusercontent.com/jaketreacher/docker-media-stack/master/README.md" ]

- Build the image by running the following command:

    docker build -t tutorial/curl .

The -t argument will set the tag in the format name:tag. Given we haven’t explicitly specified a :tag, it will default to :latest.

The . is the build context: this is the directory that will be sent to the Docker daemon.

You will see the output of each step during the build process.

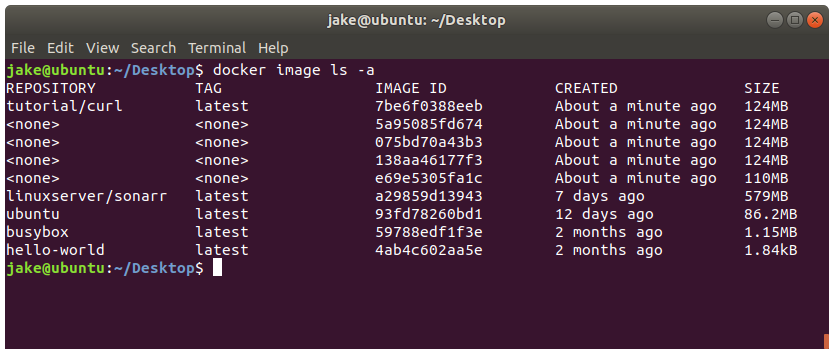
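The defaulting rule for -t can be pictured with a few lines of shell. This is only an illustration of the name:tag convention described above; full_reference is a hypothetical helper, not a Docker command, and it deliberately ignores digests (name@sha256:...).

```shell
# Hypothetical helper: print the name:tag that a reference stands for.
full_reference() {
  ref=$1
  last=${ref##*/}   # only the part after the final slash can carry a tag
  case $last in
    *:*) printf '%s\n' "$ref" ;;          # a tag is already present
    *)   printf '%s:latest\n' "$ref" ;;   # no tag given, so :latest is assumed
  esac
}

full_reference tutorial/curl
full_reference ubuntu:22.04
```

Looking only at the last path component keeps a registry port, as in localhost:5000/app, from being mistaken for a tag.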

- List your images:

    docker image ls -a

We should see our image tutorial/curl in addition to four blank images. This is because each instruction in the Dockerfile will create a new image. If we were to build again, Docker would use these cached images. Modifications in the Dockerfile will result in only the subsequent steps building a new image.

Something to keep in mind is that if we were to build this image again, the apt cache would not be up-to-date, as Docker will use the previously cached image. If you want to force Docker to rebuild everything, simply add the --no-cache argument.

- Run our image:

    docker run -it --rm -v $(pwd)/downloads:/downloads tutorial/curl

Running this image will:

1) create a new downloads directory in our current working directory;

2) run the curl command inside the container; and

3) download README.md.

Take note that any directories that Docker creates will be owned by the root user and may result in permission conflicts.
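One way to sidestep the directory ownership problem, sketched below, is to create the host directory yourself before starting the container, so that Docker finds it already in place. The docker run line is left as a comment so the snippet can be tried without Docker installed. Files written by the container may still belong to root, since curl runs as root inside it; the --user option of docker run is one thing to look into if that matters.

```shell
# Create the host directory ourselves so it belongs to the current user
# rather than being created by Docker as root.
downloads_dir="$PWD/downloads"
mkdir -p "$downloads_dir"

# Show who owns the directory.
ls -ld "$downloads_dir"

# Then start the container as before (commented out so this runs without Docker):
# docker run -it --rm -v "$downloads_dir:/downloads" tutorial/curl
```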

Challenge

Why does the container stop after curl finishes the download?

Solution

CMD will assign a process to PID 1. Once PID 1 ends, the container will exit.

Upgrade to Sonarr

- Create a Dockerfile to build a Sonarr image

You’re on your own! Attempt to use what we’ve discussed to create an image that will run Sonarr.

Here are some useful tips:

• Sonarr requires Mono to run (check for a Mono base image)

• Installation instructions here

• Mono: /usr/bin/mono

• Sonarr: /opt/NzbDrone/NzbDrone.exe

• You should have two volumes, /media and /downloads

• Run NzbDrone with --nobrowser

Solution

    FROM mono
    RUN apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 0xFDA5DFFC && \
        echo "deb http://apt.sonarr.tv/ master main" | tee /etc/apt/sources.list.d/sonarr.list && \
        apt-get update && \
        apt-get install nzbdrone -y
    VOLUME [ "/media", "/downloads" ]
    EXPOSE 8989
    CMD ["/usr/bin/mono", "/opt/NzbDrone/NzbDrone.exe", "--nobrowser"]

It’s a lot easier than expected. I’ve pretty much just copied the instructions from the official documentation while stripping any use of sudo. Commands inside of Docker will run as the root user by default.

Since each instruction will create a new layer, I’ve opted to combine the RUN instructions into a single step. For a production image, this is the preferred method as it will make the image smaller.

You may also notice I’ve included EXPOSE. This serves as a type of documentation between the person who builds the image and the person who runs the container, and will appear when inspecting an image. Although this won’t publish the port, it is good practice to include it.

- Build our image:

    docker build -t tutorial/sonarr .

- Run our image:

    docker run --rm -d -p 8989:8989 \
        -v $(pwd)/media:/media \
        -v $(pwd)/downloads:/downloads \
        -v $(pwd)/config:/root/.config/NzbDrone \
        tutorial/sonarr

Multiple Processes

The Docker developers advocate the philosophy of running a single logical service per container.

A logical service can consist of multiple OS processes. For example, if you had a webapp and a database, they are two different services so you wouldn’t run them in the same container.

Deluge is a single service that requires two components: the daemon deluged, and the web UI deluge-web.

Given the Dockerfile will only accept a single CMD instruction, the way to run multiple processes is to create a bash script to act as a supervisor. However, this is outside of the scope of this tutorial so instead we will use a base image that already has this configured.

The two popular images for this are:

1) phusion/baseimage; and

2) just-containers/s6-overlay.

Linuxserver uses the latter for their images, but I found the former to be easier to configure. So we’ll continue with that.
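For readers curious what the hand-written supervisor script mentioned above might look like, here is a rough sketch. It is not what phusion/baseimage does internally; the two sleep commands are placeholders standing in for deluged and deluge-web, and the policy (stop everything once the first process exits) is just one assumption among several reasonable ones.

```shell
#!/bin/sh
# Start two placeholder "services" in the background.
sleep 1 &
first_pid=$!
sleep 30 &
second_pid=$!

# If the script is asked to stop, pass the request on to both children.
trap 'kill "$first_pid" "$second_pid" 2>/dev/null' TERM INT

# Wait for the first service to finish, then shut the other one down.
wait "$first_pid" || true
kill "$second_pid" 2>/dev/null || true
wait "$second_pid" 2>/dev/null || true

echo "all services stopped"
```

Used as the single CMD of an image, a script like this would itself be the container's main process, so the container would exit when the script does. A ready-made base image saves us from getting details such as signal handling and restarts right.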

Deluge Dockerfile

- Create a new directory called runit

The name is not important, but given phusion/baseimage utilises runit for service supervision, it seems fitting.

- Create runit/deluged.sh with the following content

    #!/bin/sh
    exec /usr/bin/deluged -c /config -d --loglevel=info -l /config/deluged.log

- And another, runit/deluge-web.sh

    #!/bin/sh
    exec /usr/bin/deluge-web -c /config --loglevel=info

- Ensure our scripts are executable

    sudo chmod +x runit/*

- And finally, deluge.dockerfile

    FROM phusion/baseimage
    COPY runit /etc/sv/
    RUN apt-get update && \
        apt-get install -y deluged deluge-web && \
        mkdir -p /etc/service/deluged && \
        ln -s /etc/sv/deluged.sh /etc/service/deluged/run && \
        mkdir -p /etc/service/deluge-web && \
        ln -s /etc/sv/deluge-web.sh /etc/service/deluge-web/run
    VOLUME [ "/config", "/downloads" ]
    EXPOSE 8112

You may have noticed a new instruction, COPY. This will copy the contents of our runit directory into the /etc/sv/ directory of the container.

The default scripts provided by phusion/baseimage will scan all directories in /etc/service and execute the run file each one contains. Each directory should be named after its corresponding process.

Looking at our above dockerfile, you can see that we’ve created the following soft links:

    /etc/service/deluged/run --> /etc/sv/deluged.sh
    /etc/service/deluge-web/run --> /etc/sv/deluge-web.sh

- Build the image

    docker build -f deluge.dockerfile -t tutorial/deluge .

Given our dockerfile isn’t named Dockerfile, we specify the name with the -f argument.

- Run

    docker run --rm -d -p 8112:8112 tutorial/deluge

The default port for deluge-web is 8112.

Open your browser to http://localhost:8112 to confirm it is working.
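The relationship between /etc/sv, /etc/service and the run links can be tried out in a scratch directory without building anything. The sketch below is a loose imitation of the scan described above, not the code phusion/baseimage ships; the temporary directory and the placeholder script are assumptions made for the demonstration.

```shell
# Rebuild the layout under a scratch directory instead of /etc.
root=$(mktemp -d)
mkdir -p "$root/sv" "$root/service/deluged"

# Placeholder for runit/deluged.sh; it only announces that it was started.
cat > "$root/sv/deluged.sh" <<'EOF'
#!/bin/sh
exec echo "deluged placeholder started"
EOF
chmod +x "$root/sv/deluged.sh"

# Same kind of link the Dockerfile creates: service/<name>/run --> sv/<name>.sh
ln -s "$root/sv/deluged.sh" "$root/service/deluged/run"

# Imitate the scan: execute the run file found in each service directory.
for run in "$root"/service/*/run; do
  output=$("$run")
  echo "$(basename "$(dirname "$run")"): $output"
done
```

Adding deluge-web would only take a second script in sv and a second directory with its own run link, which mirrors the two mkdir and ln pairs in the Dockerfile.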

We now have two services that are able to interact with each other. However, starting them can be a tedious process. In the next part of the tutorial, we’ll look at using a docker-compose file to define our environment.